Reference to article: https://nime.pubpub.org/pub/in6wsc9t/release/1

AI is showing up in more and more fields, from assistants on websites and online chatbots (Cleverbot) to the well-known voice assistants on phones (Alexa, Siri).

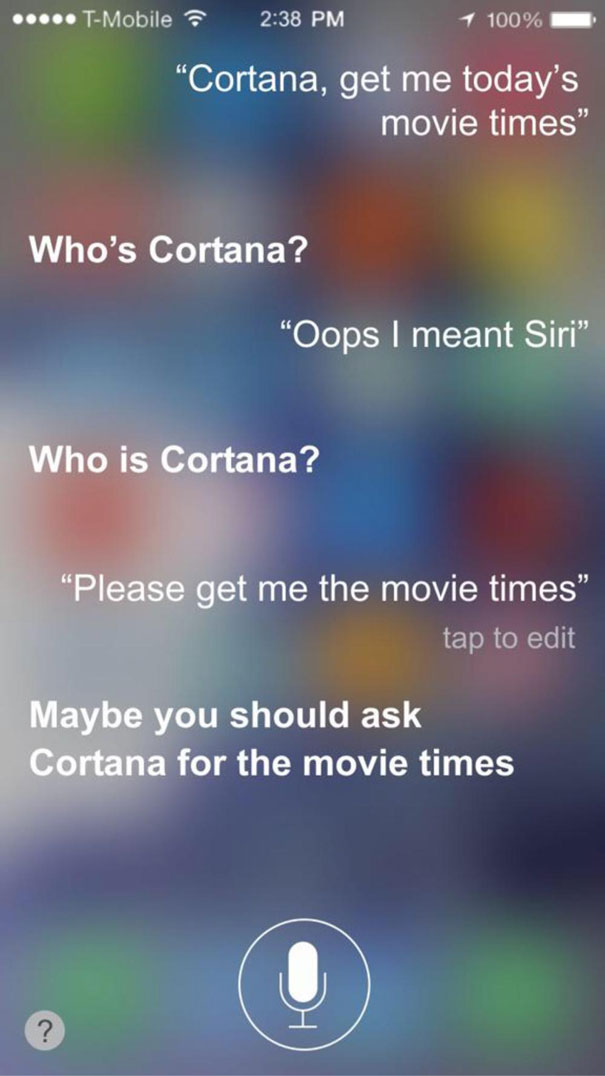

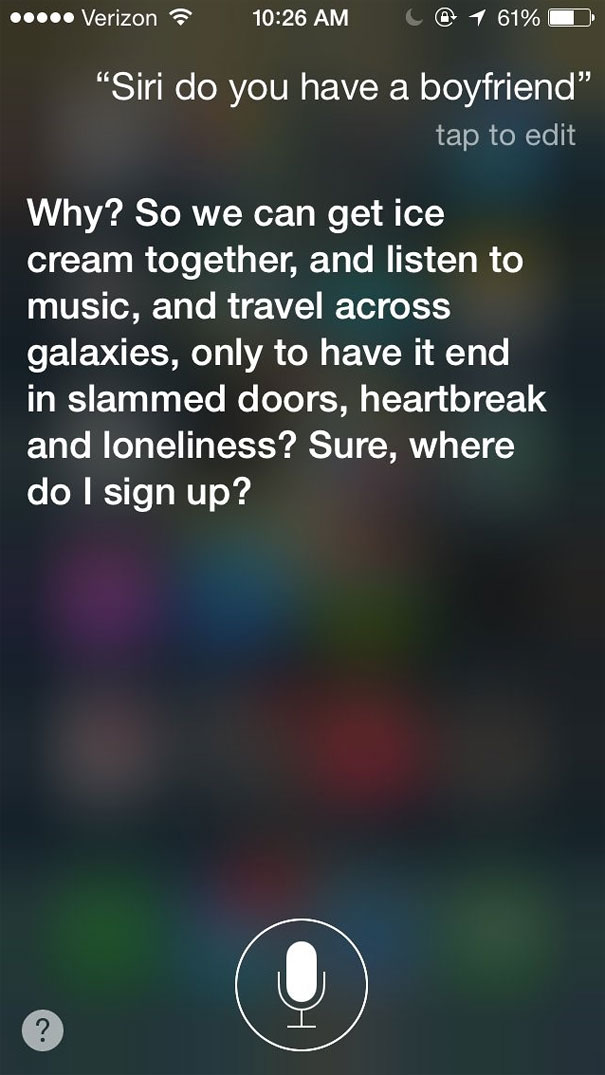

I have always enjoyed using these tools, not only for the amount of information they hold, but also for discovering their funniest answers (like the ones in the images above). That is why this paper caught my attention: applying this kind of AI to music is complex, and all the more interesting for it.

The paper presents COSMIC (COnverSational Interface for Human-AI MusIc Co-Creation). This bot not only responds appropriately to the user’s questions and comments, but can also generate a melody based on what the user asks for. In the following video you can see an example (referenced in the same paper).

The complexity of these projects is always in the back of my mind. That devices can react the way humans do strikes me as a great advance, and at the same time a slightly alarming one.

Still, this case opens up a lot of new opportunities. The same system could even serve as a learning tool. After all, this bot simply edits the parameters of a melody (speed, pitch…) so that it resembles different emotions. A user could therefore learn how different emotions tend to map to different sounds, speeds, and many other musical details.
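
To make the idea of "editing parameters to resemble an emotion" more concrete, here is a minimal Python sketch. It is not COSMIC's actual implementation: the `Melody` class, the `EMOTION_PRESETS` table and its numbers, and the `apply_emotion` function are all hypothetical names and values I made up for illustration. It only assumes that a melody can be described by a list of MIDI pitches and a tempo.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Melody:
    pitches: Tuple[int, ...]  # MIDI note numbers (60 = middle C)
    tempo_bpm: float          # playback speed in beats per minute


# Hypothetical presets, chosen only for illustration:
# emotion -> (tempo multiplier, transposition in semitones)
EMOTION_PRESETS = {
    "happy": (1.25, 4),   # faster and higher
    "sad": (0.75, -3),    # slower and lower
    "calm": (0.9, 0),     # slightly slower, same pitch
}


def apply_emotion(melody: Melody, emotion: str) -> Melody:
    """Return a copy of the melody with tempo and pitch adjusted."""
    tempo_scale, semitones = EMOTION_PRESETS[emotion]
    # Transpose every note, clamping to the valid MIDI range 0..127.
    shifted = tuple(min(127, max(0, p + semitones)) for p in melody.pitches)
    return Melody(pitches=shifted, tempo_bpm=melody.tempo_bpm * tempo_scale)


base = Melody(pitches=(60, 62, 64, 65), tempo_bpm=100.0)
happy = apply_emotion(base, "happy")
sad = apply_emotion(base, "sad")
print(happy)
print(sad)
```

Comparing the `happy` and `sad` versions of the same four notes side by side is the kind of exercise that could help a learner hear how speed and pitch shape the mood of a melody.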